Time magazine named predictive policing one of the world’s most promising inventions back in 2011. Just seven years later, it has become one of the most controversial (and arguably failed) applications of artificial intelligence.

It’s not for lack of good intentions, though. Daniel Neill, a founder of CrimeScan, which uses AI to predict where crime is likely to occur, says:

> The idea is to track sparks before a fire breaks out. We look at more minor crimes. Simple assaults could harden to aggravated assaults. Or you might have an escalating pattern of violence between two gangs.

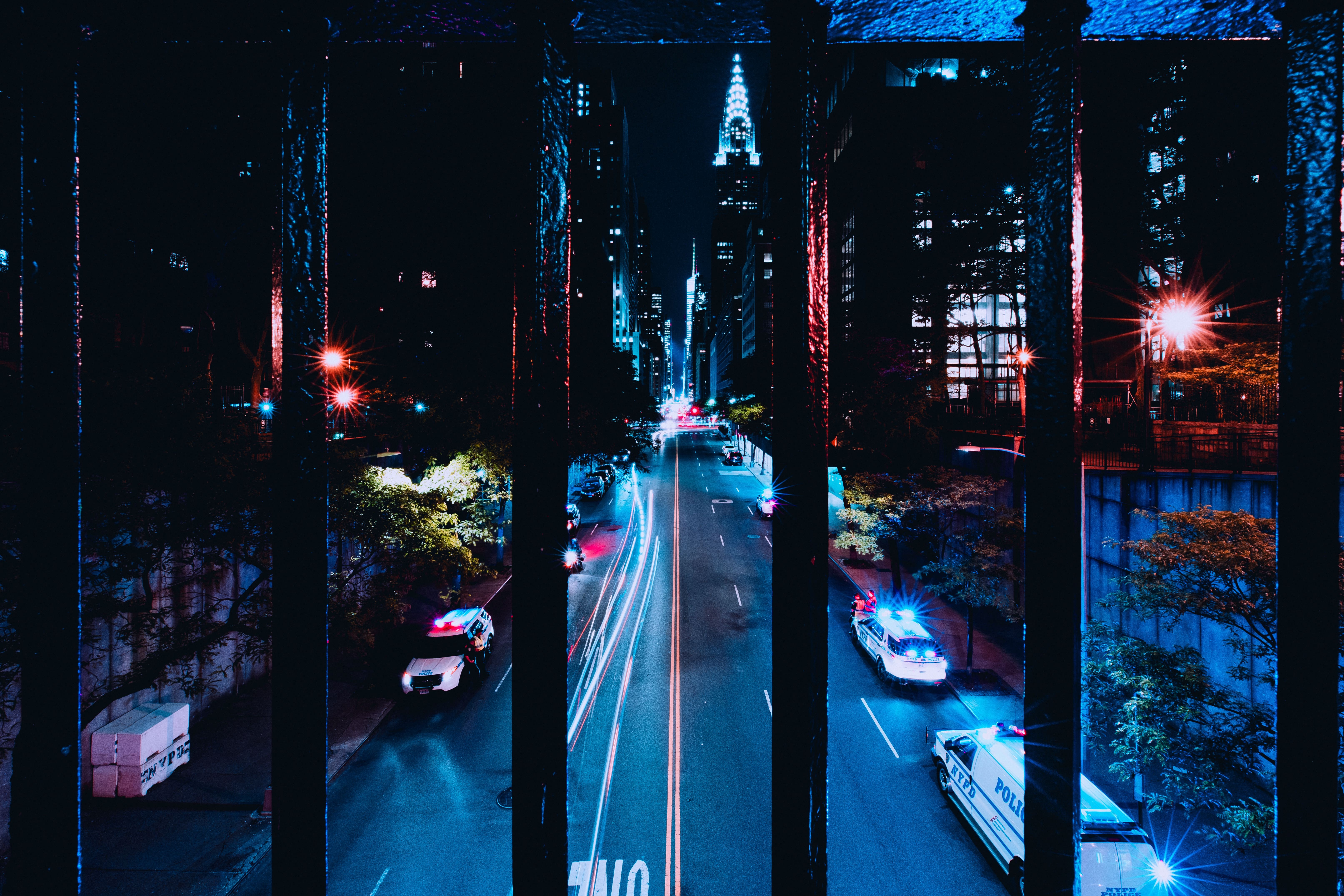

Unfortunately, it turns out that training algorithms on historical data to predict where crime will happen next really just creates a self-reinforcing feedback loop, one that reflects and reinforces attitudes about which neighborhoods are “bad” and which are “good”. More patrols in an area mean more recorded crime there, which the algorithm then reads as a reason to send even more patrols. In other words, it’s geographically biased.
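To make that loop concrete, here is a toy sketch in Python. It is my own simplification, not how CrimeScan or any real product works: the function name, the two areas, and every number are made up for illustration. Both areas are assumed to have exactly the same underlying crime, the patrol always goes to whichever area has more recorded incidents, and crime only gets recorded where the patrol is.

```python
def simulate_hotspot_feedback(recorded, incidents_per_round=5, rounds=10):
    """Toy model: patrol the area with the most recorded crime, record more there.

    Assumption (hypothetical): every area has the same true crime level, so a
    patrol would find `incidents_per_round` incidents wherever it was sent.
    """
    recorded = dict(recorded)  # work on a copy so the caller's data is untouched
    for _ in range(rounds):
        # The "prediction": the area with the most past records is the hotspot.
        hotspot = max(recorded, key=recorded.get)
        # Only the patrolled area gets new records, which feeds the next prediction.
        recorded[hotspot] += incidents_per_round
    return recorded


start = {"A": 12, "B": 10}  # A begins with only two more records than B
end = simulate_hotspot_feedback(start)
print(end)  # {'A': 62, 'B': 10}
```

A two-incident head start turns into a 62-to-10 gap after ten rounds, even though the two areas were defined as identical. Real systems are far less crude than this, but the sketch shows why recorded data alone can keep confirming the pattern it started with.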

Today, predictive policing doesn’t accomplish much more than a “gut feeling” would. For instance, I know which areas of my city I shouldn’t walk through late at night, and I’m sure you know the same about yours. These racially and geographically charged stereotypes get carried over into the AI tools.

That’s why we shouldn’t be surprised that predictive policing is racist in 2018 – it’s built on racist data. But the 2028 vision for predictive policing has the opportunity to change this.

I admire companies such as HunchLab, which tries to alleviate this bias by using other crime indicators to project where random crime spikes may occur. Taking into account locations such as bars and subways, and cross-referencing them with the weather and sporting events, they combine many variables that could precipitate a crime.

Eventually, they might add a layer of user-generated data, such as eyewitness accounts and reports of suspicious activity (kind of like Waze did for traffic maps). Then we’ll begin to see predictive policing that really is more effective.

We’ll likely feel effects similar to when you were younger and your sibling was about to